This title might not make a lot of sense yet, but it will: I could not resist titling this post with an 'homage' to the naming of the project I am going to tell you a little about. Recently, I've been working on gesture detection with the Kinect sensor. Since I've (partially) completed my experimental evaluation of how multi-angle data affects performance, I decided to shift gears a little bit. Until now, I mostly avoided the topic of hand gesture recognition with the Kinect, arguing (correctly) that the sensor was not built with that purpose in mind. But since I have relied on this sensor for so long, I wasn't going to give up on it!

To get started, I've set a simple target - recognize a "knob turning" gesture and measure which way a person is turning a virtual knob. So, imagine that you are holding an arbitrarily sized knob or dial in mid-air and you can use that gesture to regulate some underlying value in a 'smart' object, for example, the brightness of a lightbulb or a volume level. Wouldn't it be cool to be able to carry a virtual volume dial with you everywhere in the room? Wearables can do this task, of course, and some will prefer a more tangible way, such as turning the crown on an Apple Watch or some other 'mechanical' dial object. However, with the focus on 'walk-in' interactions, without any setup or auxiliary devices, mid-air camera-based depth detection, tracking, and recognition is the only way to go.

While I have a lot of experience with recognizing Discrete gestures, it was time to gain some with Progress gestures. Basically, those can tell you which stage of the gesture a person is currently at. In other words, you can map the gesture's progress value to the volume or some other setting. Here is what I mean visually:

Goal of the RFR Progress detection
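To make the mapping idea concrete, here is a minimal sketch in Python. It assumes the detector reports progress as a number between 0 and 1, which is an assumption made for illustration; the function names `progress_to_setting`, `smooth`, and `turn_direction` are hypothetical and not part of any Kinect API.

```python
def progress_to_setting(progress, lo=0.0, hi=100.0):
    """Clamp progress to [0, 1] and map it linearly onto [lo, hi]."""
    clamped = max(0.0, min(1.0, progress))
    return lo + clamped * (hi - lo)


def smooth(previous, current, alpha=0.3):
    """Exponential smoothing to damp frame-to-frame jitter (alpha in (0, 1])."""
    return previous + alpha * (current - previous)


def turn_direction(previous, current, deadband=0.01):
    """Return +1 if progress grew, -1 if it shrank, 0 if the change is
    within the deadband. Which physical direction +1 corresponds to
    depends on how the gesture was tagged (an assumption here)."""
    delta = current - previous
    if abs(delta) <= deadband:
        return 0
    return 1 if delta > 0 else -1


if __name__ == "__main__":
    # Hypothetical stream of per-frame progress values.
    frames = [0.0, 0.1, 0.25, 0.4, 0.38, 0.6]
    level = progress_to_setting(frames[0])
    for prev, cur in zip(frames, frames[1:]):
        level = smooth(level, progress_to_setting(cur))
        print(f"progress={cur:.2f} direction={turn_direction(prev, cur):+d} volume={level:.1f}")
```

The smoothing step is optional; it trades a little responsiveness for a steadier value when the per-frame progress estimate is noisy.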

Initial results proved positive - after a few rounds of data collection for both the training and testing data sets, and a lengthy tagging process, I was able to achieve a respectable correlation. The model's performance at one particular (and annoying) angle, as well as its overfitting rate, are still to be determined. So, this is a work in progress!

Correlation between the "ground truth" (thick blue line), the first model I trained (thin green line), and the state-of-the-art model (thin blue line).
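For readers who want to run a similar comparison, here is a minimal sketch of scoring a progress model against tagged ground truth. It assumes the Pearson correlation coefficient as the metric, which may not be the exact measure used for the plot above, and the sample values are made up purely for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Made-up per-frame values: tagged progress vs. model output.
    ground_truth = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
    predicted = [0.05, 0.15, 0.45, 0.55, 0.85, 0.9]
    print(f"correlation: {pearson(ground_truth, predicted):.3f}")
```

A high correlation only says the prediction moves with the ground truth; it does not capture a constant offset or scale error, so it is worth looking at the curves themselves as well.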

However, off the top of my head, I could think of at least one other method, based on heuristic detection. Unfortunately, I knew it would probably not be as accurate, so before implementing it myself and evaluating the machine-learning method and the heuristic method side by side, I searched online for other approaches - and I wasn't disappointed.

Enter Project Prague. Developed by Microsoft Cognitive Services Labs, it is an experimental hand gesture detection SDK. Although they recommend the Intel RealSense SR300 camera, the Kinect V2 is also supported. I knew I had to check it out; I knew this was the real deal. Having 3 ways to detect hand gestures with the Kinect is much better than having 0!

Project Prague is simple to install - just get the .msi file from their website, run it, wait for it to download what it needs, and you are all set. You'll get a new tray application called Gesture Discovery. It lists all the available and registered gestures in the Microsoft.Gestures.Service process. Here is a way to think about it - Gestures.Service is a background process that your applications tap into. DiscoveryClient - another name for the application in your tray - is just another application utilizing the Service. It even has a Geek View! Let's take a look at the Geek View then...

Gesture Discovery tray application. Hold on ... why are no gestures currently active? Something is wrong here - gotta go to the Geek View!

Geek View in action! Wait, why isn't the sensor detected? I am pretty sure I've plugged it in ...

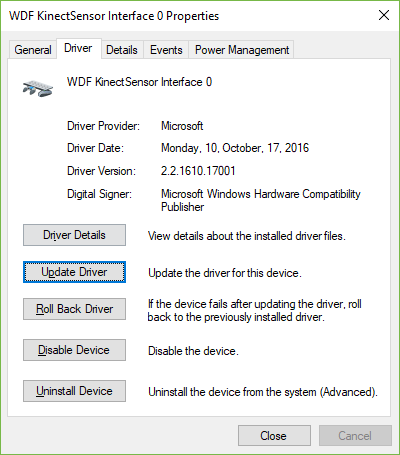

Geek View clearly shows that the sensor is not detected. Physically disconnecting and reconnecting it does nothing. A quick search online hints that this is due to an outdated Kinect driver. Well, this is simple enough to fix - go to the Device Manager, locate Kinect sensor devices -> WDF KinectSensor Interface 0 -> Properties. I've got the following window up:

Yeah, 2014 is a bit out of date ... let's try the automatic way first!

As always, I try the automatic way first in an effort to save myself some time. The automatic search found a new driver without any issues and immediately began downloading and installing it.

In this case, automatic download and install...

... worked quite well!

Here is what I've ended up with in terms of the driver version - 2016 is much more recent.

With this driver, all went fine, even without any reboots. The Gestures Service detected my Kinect and began streaming images from the IR stream. Guessing it was ready, I launched the Photos application with a picture opened. Gesture Discovery kindly let me know that there is a gesture for this app which allows me to rotate an image by rotating my wrist - kind of like turning a knob! Alas, it only turns the image clockwise, but still, it is very similar to what I am trying to achieve here. When I hovered over the Rotate button in the Photos app, I got an interesting new tooltip.

A nice animation shows what you need to do to rotate this image

After this, everything just sort of worked, so I decided to make a video.

Pretty nice, right? I do not yet know why, but the infrared stream was really "jumpy" and would break away and drop frames for me, so detection wasn't particularly accurate. Still, more often than not it works okay.

In my next post, I will show how you can add new gestures and integrate this into your Kinect application. Maybe I will also make a comparison between RFRProgress and Project Prague detection of the KnobTurn gesture. Of course, both detectors will have to be of my own making, so the results will not be purely objective, but I will try my best. Stay tuned.